Healthcare professionals around the world are witnessing a remarkable transformation as artificial intelligence reshapes medical diagnostics. While AI promises faster, more accurate diagnoses, it’s not immune to errors that can impact patient care. This article explores common AI diagnostic mistakes and offers practical solutions that healthcare providers can implement today, empowering clinicians to harness AI’s benefits while avoiding potential pitfalls.


The Promise and Perils of AI in Medical Diagnostics

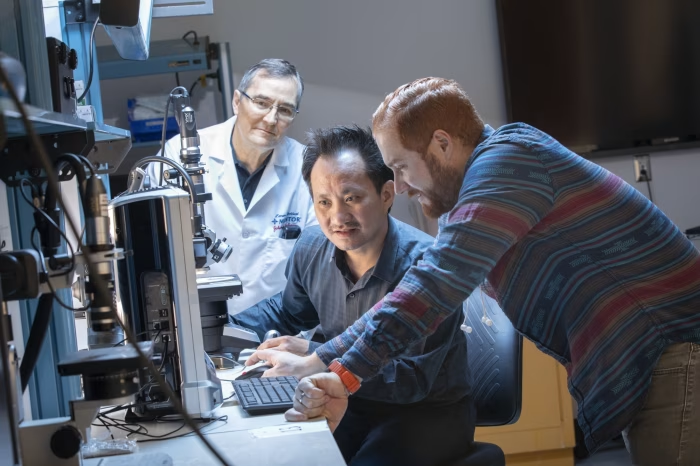

The medical community stands at the dawn of a technological revolution. AI systems analyze medical images, predict disease progression, and recommend treatments with increasing sophistication. These tools offer tremendous potential to enhance diagnostic accuracy and improve patient outcomes when properly implemented.

Healthcare facilities across the globe have embraced AI technologies with enthusiasm, hoping to reduce human error and accelerate diagnostic processes. The allure is understandable – AI never experiences fatigue, can process vast amounts of medical data in seconds, and learns continuously from each case it encounters.

However, beneath this promise lies a more complex reality. The same AI systems designed to prevent medical mistakes can sometimes introduce new forms of error. Understanding these limitations doesn’t diminish AI’s potential, but rather helps establish realistic expectations and appropriate safeguards for its clinical use.

Common AI Diagnostic Failures: Real-World Examples

The healthcare landscape has already witnessed several high-profile AI diagnostic shortcomings that serve as valuable learning opportunities. IBM Watson for Oncology, designed to recommend cancer treatments, faced criticism when it suggested unsafe and inappropriate interventions. Investigation revealed the system had been trained on non-representative data that failed to capture real-world clinical scenarios.

Google Health’s ambitious system for diabetic retinopathy screening demonstrated impressive accuracy in controlled settings but struggled to maintain performance in actual clinical environments. The gap between laboratory and real-world performance highlights the challenges of developing AI systems that work reliably across diverse healthcare settings.

Perhaps most concerning are the subtle errors that can easily go undetected. Researchers discovered that minor alterations to medical images, changes imperceptible to the human eye, could dramatically affect AI interpretations. In one striking example, simply modifying a few pixels in an image of a benign skin lesion caused an AI system to misclassify it as malignant. Even rotating an otherwise unchanged scan led to incorrect diagnoses.

Why AI Makes Diagnostic Mistakes

The diagnostic errors in AI systems stem from several interconnected factors. At their foundation, many AI tools suffer from training data limitations – they perform well on the specific populations they were developed with but struggle when encountering different demographic groups. MIT researchers found that AI models showing the highest “fairness gaps” were precisely those most adept at detecting demographic information from images, suggesting they rely on problematic shortcuts in their decision-making.

The language describing symptoms can profoundly influence AI diagnostic outcomes. Systems have produced entirely different diagnoses when presented with synonymous terms like “back pain” versus “lumbago,” despite these terms referring to identical conditions. This sensitivity to wording introduces inconsistency that human clinicians typically avoid through their contextual understanding.

Another troubling phenomenon occurs when clinicians interact with AI systems. A study revealed that when radiologists were presented with incorrect AI feedback suggesting no abnormality existed, their false negative rate jumped from 2.7% to as high as 33%. Similarly, when AI incorrectly indicated abnormalities where none existed, false positives rose from 51.4% to over 86%. This “appeal to authority” effect, often described as automation bias, demonstrates how even experienced clinicians can be swayed by automated systems they perceive as authoritative.

The Real-World Impact on Patient Care

The consequences of AI diagnostic errors extend far beyond theoretical concerns. Medical errors already cost the American healthcare system approximately $20 billion annually and contribute to between 100,000 and 200,000 patient deaths each year. Poorly implemented AI could exacerbate this problem rather than alleviate it.

When AI systems make mistakes, the ripple effects can compromise patient safety in several ways. Delayed diagnoses might postpone critical treatments, while false positives can lead to unnecessary procedures, patient anxiety, and wasted healthcare resources. The financial implications affect the entire healthcare ecosystem – from individual patients facing extra costs to institutions bearing the financial burden of error-related complications.

The relationship between clinicians and technology adds another dimension to this challenge. A multidisciplinary study from the University of Michigan revealed AI’s dual nature in clinical settings – while standard AI models showed modest improvements in clinicians’ diagnostic accuracy (increasing by 4.4% compared to baseline scenarios), inaccurate AI guidance could lead healthcare professionals astray from their initially correct conclusions.

Practical Solutions for Clinicians Working with AI

Despite these challenges, healthcare professionals can adopt several strategies to maximize AI benefits while minimizing risks. The first step involves approaching AI as a collaborative tool rather than an infallible oracle. Successful implementation means understanding AI as an assistant that enhances human judgment rather than replaces it.

Creating effective information gathering systems represents one promising approach. AI can help streamline data retrieval, reducing cognitive overload by automating the collection of relevant patient details. This allows clinicians to focus their mental energy on interpretation and decision-making rather than manual information assembly.

Clinical decision support systems powered by AI offer real-time insights while helping clinicians avoid common cognitive biases. The key lies in designing these systems to present multiple diagnostic possibilities with appropriate confidence levels rather than single, seemingly definitive answers. This approach preserves the clinician’s critical thinking role while benefiting from AI’s pattern recognition capabilities.
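As a rough illustration of presenting several possibilities with confidence levels, the sketch below converts raw model scores into a ranked differential and flags the case for closer review when no single diagnosis dominates. The function name `rank_differential`, the example scores, and the 0.6 review threshold are all hypothetical, and softmax outputs are not necessarily well-calibrated probabilities, so this is a sketch of the presentation idea rather than a clinical method.

```python
import math

def rank_differential(scores, top_k=3, review_threshold=0.6):
    """Turn raw model scores into a ranked differential with confidences.

    `scores` maps a candidate diagnosis to an unnormalized model score.
    Returns the top_k (diagnosis, confidence) pairs and a review flag.
    """
    # Softmax, subtracting the maximum score for numerical stability.
    peak = max(scores.values())
    exps = {dx: math.exp(s - peak) for dx, s in scores.items()}
    total = sum(exps.values())

    # Sort candidates from most to least likely.
    ranked = sorted(
        ((dx, e / total) for dx, e in exps.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    top = ranked[:top_k]

    # Hypothetical rule: ask for closer review when the leading
    # diagnosis falls below the chosen confidence threshold.
    needs_review = top[0][1] < review_threshold
    return top, needs_review

# Illustrative scores only; not drawn from any real model or patient.
scores = {
    "pneumonia": 2.0,
    "pulmonary embolism": 1.5,
    "bronchitis": 0.5,
    "heart failure": 0.1,
}
differential, needs_review = rank_differential(scores)
for diagnosis, confidence in differential:
    print(f"{diagnosis}: {confidence:.0%}")
print("Flag for closer review:", needs_review)
```

Showing the runner-up diagnoses alongside the leading one keeps the alternatives visible to the clinician instead of hiding them behind a single answer.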

Building Better AI Diagnostic Systems

The future of AI in healthcare depends on developing more robust systems through several complementary approaches. Hierarchical screening processes represent one innovative solution, using AI tools at multiple levels to improve diagnostic accuracy. For instance, AI language models can extract diagnostic labels from free-text sources like radiology notes, improving code accuracy for downstream applications.

More sophisticated approaches like the Symptom-Disease Pair Analysis for Diagnostic Error (SPADE) tool identify potential diagnostic errors by connecting symptoms identified during one healthcare encounter with diagnoses made in subsequent visits. This creates a temporal map that can highlight missed diagnostic opportunities.
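The core idea of linking a symptom visit to a later diagnosis can be sketched in a few lines. This is a simplified illustration and not the actual SPADE implementation: the record layout, the function name `find_symptom_disease_pairs`, the dizziness and stroke labels, and the 30-day window are all assumptions made for the example.

```python
from datetime import date

def find_symptom_disease_pairs(encounters, symptom, disease, window_days=30):
    """Find symptom visits followed by a disease diagnosis within a window.

    Each encounter is a dict with hypothetical keys "patient", "date", "label".
    Returns a list of (patient, symptom_date, disease_date) tuples.
    """
    # Group encounters by patient.
    by_patient = {}
    for enc in encounters:
        by_patient.setdefault(enc["patient"], []).append(enc)

    pairs = []
    for patient, visits in by_patient.items():
        visits.sort(key=lambda e: e["date"])
        for i, first in enumerate(visits):
            if first["label"] != symptom:
                continue
            # Look forward in time from the symptom visit.
            for later in visits[i + 1:]:
                gap = (later["date"] - first["date"]).days
                if gap > window_days:
                    break  # Visits are sorted, so later ones are out of range too.
                if later["label"] == disease and gap > 0:
                    pairs.append((patient, first["date"], later["date"]))
                    break
    return pairs

# Made-up records for illustration only.
encounters = [
    {"patient": "A", "date": date(2024, 1, 3), "label": "dizziness"},
    {"patient": "A", "date": date(2024, 1, 10), "label": "stroke"},
    {"patient": "B", "date": date(2024, 2, 1), "label": "dizziness"},
    {"patient": "B", "date": date(2024, 6, 1), "label": "stroke"},
    {"patient": "C", "date": date(2024, 3, 5), "label": "headache"},
]
pairs = find_symptom_disease_pairs(encounters, "dizziness", "stroke")
print(pairs)
```

In this example only patient A is flagged, because patient B's diagnosis falls well outside the 30-day window. A flagged pair marks a case worth reviewing, not a confirmed diagnostic error.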

Perhaps most importantly, clinicians themselves must be integrated as key stakeholders across the development process. AI should be viewed not as a replacement for medical expertise but as a tool that supports better, faster decisions while enhancing patient outcomes. This human-centered approach ensures technology serves medicine’s fundamental goals rather than introducing new complications.

The Path Forward: Balancing Innovation with Safety

The story of AI in medical diagnostics continues to unfold, presenting both extraordinary opportunities and significant challenges. Forward-thinking healthcare organizations are establishing continuous feedback loops where AI systems and human experts learn from each other. These learning environments allow for the identification of AI weaknesses while simultaneously addressing knowledge gaps in clinical practice.

Regulatory oversight will play a crucial role in this evolution. The FDA and similar agencies worldwide have begun developing frameworks for evaluating and monitoring AI in healthcare, though much work remains to create standards that balance innovation with patient safety. Successful regulation must be flexible enough to accommodate rapidly evolving technology while ensuring appropriate safeguards.

The most promising vision for the future integrates AI seamlessly into clinical workflows, where it serves as a valuable ally that handles routine tasks, flags potential concerns, and provides decision support without replacing the irreplaceable human elements of healthcare – empathy, judgment, and the therapeutic relationship between provider and patient.
